In 1968, the film 2001: A Space Odyssey introduced the world to HAL, a supercomputer with a mind and plan of its own. Such advanced technology seemed to be just science fiction. Or is it?

Today, entrepreneurs, government researchers, and college professors around the world are in a heated race to develop true artificial intelligence. Many AI prototypes are already part of our lives.

But what is artificial intelligence? How did we get to this point, and what new trends might come in the future?

A survey of the AI landscape shows that science fiction may be closer to reality than we realize.

Learn about historical technological change and a new path forward, where work isn’t necessary for everyone to be happier and have more fulfilling lives.

What is artificial intelligence, and how does it work?

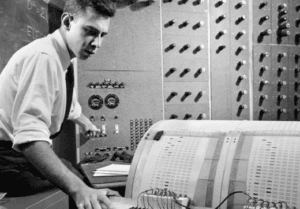

On July 7, 1958, a group of men gathered around a massive, refrigerator-sized computer in the offices of the US Weather Bureau in Washington, DC. They watched as Frank Rosenblatt, a young professor from Cornell University, showed the computer a series of cards.

Each card had a black square printed on one side. The machine’s task was to identify which cards had the mark on the right side and which had it on the left. While it couldn’t tell the difference at first, Rosenblatt continued to show it flashcards, and its accuracy improved. After 50 tries, it could identify a card’s orientation nearly perfectly.

Rosenblatt named the machine the Perceptron. Although it seemed primitive at the time, it was actually an early precursor to what we now call artificial intelligence (AI). In its day, however, it was dismissed as a novelty.

Today, Rosenblatt’s Perceptron and its successor, the Mark I, are recognized as very early versions of neural networks. Neural networks are computer systems that carry out a process often called machine learning. At the most basic level, they work by analyzing massive amounts of data and identifying patterns. As the network finds more patterns, it refines its analytical algorithms to produce more accurate results.

Back in 1960, this was a slow process that involved a lot of trial and error. To train the Mark I, scientists showed the computer pieces of paper, each printed with a letter such as A, B, or C. The computer would guess which letter it saw through a series of calculations. A human would then mark whether the guess was correct or incorrect.

The Mark I would update its calculation to guess more accurately next time. Scientists like Rosenblatt compared such a process with the human brain, arguing that each calculation was like a neuron. By connecting many calculations that adapt and update over time, a computer could learn similarly to humans. Rosenblatt called this connectionism.
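The guess, check, and update loop described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not Rosenblatt’s actual design: a card is assumed to be a strip of six cells with exactly one marked cell, and the names `make_card`, `predict`, and `train` are invented for illustration. The update used is the classic perceptron learning rule, which adjusts the weights only after a wrong guess.

```python
# Toy perceptron, loosely modeled on the flashcard demo described in the text.
# Assumption: a "card" is a strip of 6 cells with exactly one marked cell;
# cells 0-2 count as "left" (label -1) and cells 3-5 as "right" (label +1).

WIDTH = 6

def make_card(position):
    """Return (pixels, label) for a card marked at the given cell."""
    pixels = [1 if i == position else 0 for i in range(WIDTH)]
    label = 1 if position >= WIDTH // 2 else -1
    return pixels, label

def predict(weights, bias, pixels):
    """Weighted sum of the pixels, thresholded at zero."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1 if score > 0 else -1

def train(num_cards=50):
    """Show cards one by one; adjust the weights only after a wrong guess."""
    weights, bias, mistakes = [0] * WIDTH, 0, 0
    for step in range(num_cards):
        pixels, label = make_card(step % WIDTH)  # cycle through all positions
        if predict(weights, bias, pixels) != label:
            mistakes += 1
            # Perceptron rule: nudge each weight toward the correct answer.
            weights = [w + label * p for w, p in zip(weights, pixels)]
            bias += label
    return weights, bias, mistakes

weights, bias, mistakes = train()
accuracy = sum(
    predict(weights, bias, make_card(pos)[0]) == make_card(pos)[1]
    for pos in range(WIDTH)
) / WIDTH
print(f"mistakes during training: {mistakes}, final accuracy: {accuracy:.0%}")
```

In this toy setup the machine guesses wrong six times, all within its first four passes through the cards, and after that it classifies every card correctly.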

In a 1969 book, Marvin Minsky criticized the concept of connectionism. He argued that such machines could never scale up to solve more complex problems. His book was very influential, and through the 1970s and early 80s, interest in neural network research declined.

Few institutions funded neural network research during this “AI Winter,” which stalled machine learning progress. But it didn’t stop completely.

What is deep learning?

Geoff Hinton was an outsider who earned a Ph.D. at the University of Edinburgh in the 1970s. At the height of the AI winter, Hinton was one of the few who favored a connectionist approach to AI. After graduation, he struggled to find a job and moved between different universities for the next decade, all while continuing to refine his theories of machine learning.

He believed that adding layers of computation, an approach he called deep learning, could unlock the full potential of neural networks.
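Why might extra layers matter? A classic textbook illustration, added here as an assumption and not something this article describes, is the XOR function: no single threshold unit can compute it, but two stacked layers can. The sketch below uses hand-picked weights rather than learned ones, and the function names are hypothetical.

```python
# Illustration only: hand-set weights, not learned, and not from the article.

def step(x):
    """Threshold activation: fire (1) only if the input is positive."""
    return 1 if x > 0 else 0

def single_unit(x1, x2, w1, w2, bias):
    """One perceptron-style unit with two inputs."""
    return step(w1 * x1 + w2 * x2 + bias)

def two_layer_xor(x1, x2):
    """Two hidden units feeding one output unit."""
    h_or = single_unit(x1, x2, 1, 1, -0.5)    # fires if at least one input is on
    h_and = single_unit(x1, x2, 1, 1, -1.5)   # fires only if both inputs are on
    return single_unit(h_or, h_and, 1, -1, -0.5)  # "or, but not and"

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor_outputs = [two_layer_xor(a, b) for a, b in inputs]
print(xor_outputs)

# Brute-force check over a small grid of weights: no single unit matches XOR.
grid = [v / 2 for v in range(-6, 7)]
single_unit_solves_xor = any(
    [single_unit(a, b, w1, w2, bias) for a, b in inputs] == xor_outputs
    for w1 in grid for w2 in grid for bias in grid
)
print(single_unit_solves_xor)
```

The grid search is only a spot check over a few thousand weight settings, but it fits the general result that XOR is not linearly separable, so a single unit cannot represent it while a layered network can.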

Over the years, he won over a few doubters and made some slow progress.

Then, in 2008, he met Li Deng, a computer scientist from Microsoft, at NIPS, an AI conference in Whistler, British Columbia. Deng was developing speech recognition software for Microsoft at the time. Hinton saw an opportunity and suggested that deep learning neural networks could outperform any conventional approach. Deng was skeptical but interested, and the two decided to work together.

Deng and Hinton spent much of 2009 at the Microsoft research lab in Redmond, Washington, where they wrote a program that used machine learning models to analyze hundreds of hours of recorded speech. The program ran on GPUs, processing chips normally used for computer games.

After weeks of progress, they got astounding results; the program could analyze the audio files and select individual words with amazing accuracy.

Soon, other tech companies were exploring similar programs. The Google scientist Navdeep Jaitly used his own deep learning system to achieve even better results, with a program that had only an 18% error rate. These early successes were a strong argument for the potential power of neural networks.

Researchers realized the same basic concept could be applied to analyze many other problems like image search or navigation of self-driving cars.

Within a few years, deep learning was one of the hottest technologies in Silicon Valley. Google, ever ambitious, led the charge by buying Hinton’s research firm DNNresearch and other AI startups like London’s DeepMind. But this was just the beginning. In the coming years, the competition would only become more intense.

When the AI race started

In 2013, Clement Farabet was having a quiet night when suddenly his phone rang. He answered, expecting to hear a friend. Instead, he heard Mark Zuckerberg. The call was surprising but not completely unexpected: Farabet, a researcher at NYU’s deep learning lab, had already been contacted by various Facebook employees attempting to recruit him to the social media company.

Farabet was hesitant, but a personal appeal from the CEO piqued his interest.

Farabet wasn’t alone; many of his colleagues received similar offers. The tech giants of Silicon Valley were in a recruitment race. Each company was determined to be the industry leader in the field of AI.

In the early 2010s, neural networks and deep learning were relatively new technologies. However, the ambitious entrepreneurs at Apple, Facebook, and Google were convinced that artificial intelligence was the future. While no one knew exactly how AI could make a profit, each company wanted to be the first to find out.

Google had a head start by buying DeepMind. Still, Microsoft and Facebook were close behind, spending millions on hiring AI researchers.

Facebook wanted to use cutting-edge neural networks to optimize the company by making sense of the massive amount of data on its servers. A network could learn to identify faces, translate languages, or anticipate buying habits for targeted ads. It could even operate bots that perform tasks like messaging friends or placing orders. It could make the site come to life.

Google had similar plans for research. AI specialists like Anelia Angelova and Alex Krizhevsky used Google Street View data to train self-driving cars to navigate the complexity of real-world cities. Demis Hassabis designed a neural network that improved the energy efficiency of the millions of servers the company needed to operate.

The press hyped these projects as forward-thinking and potentially world-changing. But Nick Bostrom, an Oxford University philosopher, warned that advances in AI could easily go sideways. He argued that super-intelligent machines could be unpredictable and make decisions that put humanity at risk. However, such warnings didn’t slow the heavy investment.

What is a neural network?

Go seems, at first glance, a simple game. Two players take turns placing black and white stones on a grid, and each tries to encircle the other. But in reality, Go is incredibly complex. Each contest has such a vast number of potential paths, and is so unpredictable, that no computer could beat the best human players until October 2015.

Google’s AI program AlphaGo took on Fan Hui, a top-ranked player. The neural net system, trained by analyzing millions of games, was unstoppable; it won five games in a row. A few months later, it even defeated the reigning human champion, Lee Sedol.

Clearly, AI had reached a turning point, and the more scientists studied neural networks, the more powerful those networks became.

In the decades after Rosenblatt began experimenting with the Perceptron, the capabilities of neural networks grew enormously. Two trends fueled this incredible leap:

- Computer processors got a lot faster and cheaper, so a modern chip can compute far more calculations than earlier models.

- Data became an abundant resource, so networks could be trained on a large variety of information.

As a result, researchers could creatively apply machine learning principles to many different problems. Two scientists, Google engineer Varun Gulshan and physician Lily Peng, made a plan to efficiently diagnose diabetic retinopathy. Using data from over 130,000 digital eye scans from India’s Aravind Eye Hospital, the duo trained a neural network to spot warning signs of the disease.

After training on this data, their program could automatically analyze any patient’s eyes in seconds. It was accurate 90% of the time, about as good as a trained doctor.

Similar projects could revolutionize the future of healthcare. Neural networks could be trained to analyze X-rays, CAT scans, MRIs, and other types of medical data so that they efficiently spot diseases and abnormalities. In time, they could even discover patterns too subtle for humans to see.

How a Deep Fake is created

You may have already seen a video on social media like the one where Trump speaks fluent Mandarin. And it is not a bad overdub. The motion of his mouth is in perfect sync with the syllables you hear. Even his body language matches the rhythm of the speech. But as you play the clip again and again, you find small glitches and inconsistencies. The video is fake but still very convincing.

It didn’t trick you this time, but as AI technology progresses, you might not be so confident in the future.

In the early 2010s, machine learning research focused on teaching computers to catch patterns in information. AI programs trained on large image sets to identify and sort pictures based on their content. But in 2014, Ian Goodfellow, a Google researcher, proposed a different idea: could AI generate completely new images?

Goodfellow designed the first generative adversarial network (GAN), which works by having two neural nets train each other. The first network generates images, while the second uses complex algorithms to judge how realistic they are. As the two networks swap information over and over, the generated images become more true to life.

While faked images have always existed, GANs made generating realistic renderings of any person or thing easier than ever. Early adopters quickly used the technology to create convincing videos of celebrities, politicians, and other public figures. While some of these so-called “deep fakes” are harmless fun, others place people into pornographic images, which raises obvious red flags.

Deep fakes aren’t the only problem that AI research is facing. Critics have also noted issues with racial and gender bias. Facial recognition programs from both Google and Facebook were trained on data that skewed toward white males, distorting their accuracy. Such findings made people concerned about AI’s potential to be oppressive.

When the government started using AI

In the fall of 2017, eight engineers at Clarifai, an AI research and development startup, were given an odd task. They were asked to create a neural network capable of identifying buildings and vehicles, and the program was meant to work in a desert environment.

Hesitantly, the engineers began work, but soon disturbing rumors emerged. They weren’t developing tools for a regular client; the client was the US Department of Defense. The neural network would help drones navigate. Unknowingly, they had become part of a government weapons program.

After the news came out, the engineers immediately quit the project. However, this was just the beginning. AI is increasingly used in world politics.

Private companies weren’t the only organizations that wanted to explore the growing abilities of AI. Governments are also increasingly aware of the transformative potential of machine learning. In China, the State Council has an ambitious plan to become the world leader in AI by 2030.

Similarly, the United States government is investing more money in developing AI systems, often for the military. In 2017, Google and the Defense Department entered discussions about a new multimillion-dollar partnership called Project Maven. Under the agreement, Google’s AI teams would develop neural networks to optimize the Pentagon’s drone program.

The prospect made engineers uncomfortable, and more than 3,000 employees signed a petition to drop the contract. While the company ultimately declined the deal, Google’s executive board hasn’t ruled out more partnerships in the future.

You might remember Facebook’s Cambridge Analytica scandal, in which private data from 50 million profiles was used to create misleading ad campaigns for Donald Trump in 2016. The platform’s moderation was also a problem, as fake news and radical propaganda circulated widely.

In 2019, Zuckerberg testified to Congress that his company would use AI to clean up harmful content. But the solution wasn’t perfect. Even the most advanced neural networks struggle with the nuances of political speech. Worse, AI can produce new misinformation as fast as it can be moderated.

The reality is that anyone who uses AI for good will always be in an arms race with bad actors.

The difference between human learning and deep learning

In May 2018, the crowd at the Shoreline Amphitheatre in Mountain View, California, went wild. But the man on stage wasn’t a rock star wielding a guitar. It was Google CEO Sundar Pichai holding a phone.

The occasion was I/O, the tech company’s yearly conference, where Pichai demonstrated its newest innovation, Google Assistant. Using a neural net technology called WaveNet, the assistant could make phone calls with a realistic-sounding human voice. In the clip Pichai showed, the AI program successfully made a restaurant reservation, and the woman on the other end didn’t even realize she was speaking with a computer.

The new AI abilities wowed the crowd, though not everyone was so impressed. Gary Marcus, a New York University psychology professor, rolled his eyes at Pichai’s demonstration. He is pessimistic about the potential of machine learning.

Google presented the AI assistant as having nearly human-level speech understanding and conversation skills. But Marcus thought the program only seemed impressive because it performed a very specific and predictable task.

Marcus’ argument draws on a school of thought called nativism. Nativists believe a huge part of human intelligence is hard-wired into our brains by evolution, which makes human learning fundamentally different from neural net deep learning. For example, a baby’s brain is so agile that it can learn to identify an animal after being shown one or two examples.

In contrast, to perform the same task, a neural net must be trained on millions of pictures. For nativists, this distinction explains why neural net AI hasn’t improved as fast as expected, especially in tasks like understanding language.

While Google Assistant’s AI can get through a basic rote conversation, it isn’t capable of engaging in the more complex discussions that come easily to an average person. AI might be able to make a reservation, but it can’t understand a joke.

Of course, researchers are working on overcoming this obstacle. Teams at both Google and OpenAI are experimenting with an approach called universal language modeling. These systems train a neural net to better understand the context of language and have shown some progress. However, whether they will make great conversation partners remains to be seen.

Is super-intelligent AI possible?

Google is one of the most successful companies in the world and is responsible for inventing and operating many of the services that run the modern world. It also generates incredible wealth, worth tens of billions of dollars. So imagine if there were two or even 50 Googles. Could AI make this possible?

According to Ilya Sutskever, the chief scientist at OpenAI, it may be possible. It would be revolutionary if scientists could build artificial intelligence as capable and creative as the human mind. One super-intelligent computer could build another, better version, and so on. Eventually, AI could take humanity beyond what we can imagine.

It is a dream, but is it realistic? Even the brightest minds in Silicon Valley aren’t entirely sure.

When Frank Rosenblatt introduced the Perceptron, there were skeptics but also optimists. Around the world, futurists and scientists made bold claims that computers would soon match or surpass humanity in technical ability and intellectual progress.

Despite the uneven speed of progress, many believe that humanlike or even superhuman intelligence, called artificial general intelligence (AGI), is possible. In 2018, OpenAI updated its charter to make developing AGI a goal of the company’s research. Shortly after the announcement, Microsoft invested over one billion dollars in the research team’s ambitious goals.

Exactly how AGI can be achieved is unclear, but researchers are approaching the problem from different angles. Companies such as Google, Intel, and Nvidia are working on new processing chips specially designed for neural networks. The idea is that supercharged hardware will allow networks to process enough data to overcome the current barriers that machine learning programs face.

Geoff Hinton, one of machine learning’s earliest boosters, is taking a different path. His current research focuses on a technology called capsule networks. This experimental model more closely mirrors the human brain in function and structure. It may still take years before it produces concrete results.

By then, a completely new theory could come along. With AI, the future always stays uncertain.

Will AI take your job?

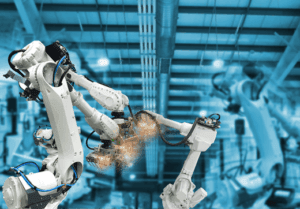

You may hear many unbelievable predictions about how technology will change the world: that our society will never be the same again, that we will become redundant, and that an army of robots will take our jobs.

Both optimists and pessimists agree there needs to be a change of direction. But what should it look like? What exactly is automation, and what will it do to human society? And how can we embrace it to create a better world?

You have probably heard the phrase “the machines are taking over.” It is not hard to see where it comes from, as every year brings new technological innovations. But if computers and robots become smarter and smarter, will humans become unnecessary?

The reality isn’t quite that simple, because machines will never take all our jobs. Their effect on the labor market is more nuanced. And the fear of technological change isn’t new.

Centuries ago, at the start of the Industrial Revolution in Britain, weavers destroyed early machines. These people, known as Luddites, feared for their jobs. They were worried because rapid technological change in their industry was causing massive upheaval.

The change wasn’t all bad; while some workers suffered, others benefited. If a low-skilled worker learned how to use the new machines, his output increased enormously and, later, so did his earnings.

New technology is often complementary: while it replaces some workers, it makes others more productive. How? It helps them with some of their more difficult tasks.

For example, an algorithm can process legal documents but can’t replace a lawyer. Instead, it frees up the lawyer’s time for more creative work like problem-solving, writing, and face-to-face client meetings.

This increased productivity is the second benefit of automation. Think about a country’s economy as a pie that everybody needs to share. Of course, machines change how the slices of this pie are distributed. However, they also make the pie itself much bigger.

Automated teller machines (ATMs) are a good example. When they were first introduced, people feared that they would completely replace bank staff.

But actually, in the last 30 years, the number of ATMs in the USA quadrupled. And at the same time, the number of bank tellers also increased by about 20 percent. While ATMs replaced tellers when it came to handing cash out, they also freed up humans to give financial advice and offer personalized support.

The economy grew, and overall demand for banking and financial advice increased. Yes, the average number of tellers per bank dropped over the last few decades. But the number of banks where tellers could get a job rose by around 43%.
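The two percentages above fit together with a little arithmetic. The sketch below assumes, as a simplification the text does not state outright, that the roughly 20 percent rise in tellers and the roughly 43 percent rise in banks cover the same period. The variable names are made up for illustration.

```python
# Back-of-the-envelope check using the rounded figures quoted in the text.
# Assumption: both growth rates refer to the same time span.
teller_growth = 0.20  # total number of bank tellers: about +20%
bank_growth = 0.43    # number of banks: about +43%

# Tellers per bank scale by (1 + teller growth) / (1 + bank growth).
tellers_per_bank_change = (1 + teller_growth) / (1 + bank_growth) - 1

print(f"Change in tellers per bank: {tellers_per_bank_change:.1%}")
# prints "Change in tellers per bank: -16.1%"
```

Under that assumption, the average bank ended up with about a sixth fewer tellers, even though the total number of teller jobs grew.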

How will AI affect the job market?

The question is, whose jobs are machines taking? Is it the people who work on assembly lines? Or cashiers at supermarkets? Should even brain surgeons worry that they might be replaced by robots?

The growth of technology will certainly affect everyone. But recent trends offer clues about which sectors of the economy are more likely to be automated.

In the last few decades, technology benefited highly skilled, educated workers more than their low-skilled neighbors. The reason is computers: between 1950 and 2000, their power increased by a factor of 10 billion.

This created a demand for highly trained workers who could operate the new machines. As demand grew, so did supply, and more people learned to use computers. But then something interesting happened: demand kept growing, and the wages of high-skilled workers began climbing.

In 2008, economists recorded an unprecedented income gap between college graduates and people who had only completed high school.

Does this mean technology always offers more benefits to well-educated people? Not really. In the past, it was rather the other way around. Remember the Luddites in Industrial Revolution England, where weaving was a high-skill job. Once mechanical looms were introduced, you no longer needed advanced training to make good cloth, and low-skilled workers reaped the rewards.

Economists now observe that technology can boost both low- and high-skilled jobs while the middle class might suffer, as there are more cleaners and lawyers but fewer secretaries and salespeople.

A trio of MIT economists has a theory: routine tasks are easier to automate than non-routine work that depends on judgment, interpersonal skills, and complex manual work.

Simple routine skills can be explained, broken down, and turned into algorithms. This makes them easy for computers, which struggle with non-routine skills that are complex and harder to explain.

For a long time, people believed such non-routine jobs were safe from automation because nobody could teach them to machines. But that has started to change.

Can AI do non-routine tasks?

The ancient Greek poet Homer is famous for the Odyssey and The Iliad. However, he didn’t only write about heroes and battles. He also described what we now call artificial intelligence or AI. In The Iliad, Homer describes a driverless three-legged stool that comes to its owner when called, similar to today’s autonomous cars.

Homer likely didn’t have self-driving vehicles in mind when he told the story. But it makes an important point: humans have long dreamed about machines capable of autonomy. Recent breakthroughs are making those dreams real.

To understand AI better, let’s look at its history. The first attempts at artificial intelligence came in the mid-twentieth century, when computing became more popular. Early AI researchers wanted to replicate human thinking.

One example was chess software: developers asked grandmasters to explain their thinking about the game, and then tried to teach those processes to the computer.

However, by the late 1980s, this approach had stalled in chess as well as in translation and object identification. Early AI couldn’t beat a human by thinking like a human. So scientists realized they needed to change tack, as imitating human thought could only get computers so far.

The next wave of AI research took a more pragmatic approach. Scientists gave computers tasks that the software should accomplish in whatever way possible, even if it didn’t make sense to humans. Instead of following traditional chess or translation strategies, the new AI programs used hundreds of data points and scanned them to find patterns.

As a result, AI research took a giant leap forward. In 1997 IBM’s Deep Blue beat the world chess champion, Garry Kasparov. AI isn’t just excelling at chess. Modern image identification can routinely outperform humans in competitions.

These advances are critical to understanding how automation will affect the future of work. Economists once thought that computers would never function without human guidance. But machines can now find nonhuman solutions to problems and tasks.

This suggests that machines may someday learn non-routine skills that were previously considered beyond their capabilities.

Which jobs can be automated?

A science fiction author, William Gibson, once said, “The future is here – it’s just not evenly distributed.” It is helpful to keep this line in mind when we discuss automation. When it comes to what computers can do, the future is here now. AI can outperform humans in a large number of tasks, from detecting liars to manufacturing prosthetic limbs.

But the jump from “AI can do it” to “AI will do it” is huge, and this gap is different for each country.

As technological capabilities increase, automation will transform every industry. Take agriculture, where today’s farmers have driverless tractors, automatic sprayers, and facial recognition systems for cattle. In Japan, 90% of crop spraying is done by drones. Even tasks that require fine motor skills have been automated, such as picking oranges, which robots do by shaking them off trees.

Industries that require more complex thinking, like law, finance, and medicine, all have software that can analyze more information than we can. AI is great at finding relevant patterns and past cases.

Even jobs that require emotions and feelings can be done by machines. Some facial recognition systems can already outperform humans when it comes to telling if a smile is real or not.

Social robots, which can detect and react to human emotion, are increasingly coming into health care, an area expected to become a 67 billion industry. A humanoid machine called Pepper is used in Belgian hospitals. Its job is to greet patients and escort them through corridors and buildings.

Just because more tasks can be automated doesn’t mean all of them will be. Automation requirements and costs differ across regions and countries, and automation doesn’t necessarily move at the same pace everywhere.

For instance, Japan has a lot of elderly people and a shortage of nurses, so hospitals focus on automating care work. In comparison, countries with younger populations and more people willing to take low-wage jobs don’t focus much on healthcare automation. There may even be political pressure to stay away from robo-medics.

When will AI replace our jobs?

Finding a job isn’t much fun. Now imagine you are unemployed because your job has been automated. How can you look for work if a machine has replaced you? It is a challenge that will most likely affect millions of people.

Automation will expand the size of the economic pie, meaning it creates jobs to offset unemployment. Still, not all people can fill those new jobs as there are many obstacles along the way, like a mismatch of skills.

Most new jobs are high-skilled roles, like AI management specialists, which a low-skilled factory worker can’t easily fill.

A geographic mismatch can also force you to move hundreds of miles for the sake of a job. While the internet has made remote work more accessible, geography still matters. Just think of Silicon Valley, which attracts so many tech companies that founders often move there to find talent and build new connections.

Economists call such obstacles friction. Scientists believe they will be only short-term and will smooth out in the long run. But the structural change in the labor market won’t go away.

As technology boosts productivity and overall output, it will reach a point where it doesn’t need humans anymore. Take cars: first, GPS helped taxi drivers find more efficient routes. Now driverless cars might replace them entirely. Even if taxi demand increases, it won’t mean more jobs for humans, because companies will just produce more driverless cars.

But this change won’t happen overnight. We often overestimate technology’s effect in the short run and underestimate it in the long run.

These effects will be seen over decades and will continue to grow as AI gets smarter. The trend shows that even though output increases, there will still be less work for humans to do.

Why the rich get richer

For most of human history, we struggled for subsistence, a term introduced by economist John Maynard Keynes meaning that human society simply doesn’t produce enough for everyone to live on. It made the question of how to distribute resources an afterthought.

But today’s technology has manufactured enough for the entire world to live comfortably. The economic pie has grown. However, the problem is how should we divide it.

When you look at economic data, one thing has become clear in recent years the slice of the pie has become less equal.

Think about the stuff we own our capital which can be divided into two categories traditional and human capital. Traditional capital is stuff you can do, like equipment, intellectual property, or land. Human capital is the concept of all your skills and abilities.

Automation wouldn’t be a big deal if everyone had enough traditional capital. But most people control very little traditional capital. Instead, they use their human capital to generate wealth. The problem is when their job becomes automated, they lose human capital.

Data show before 1989 income growth of all Americans was steady. Between 1980 and 2014, things changed. Low earners enjoyed little income growth, but the 1 percent population who already earned the most saw their income skyrocket.

In wealthy countries all over the world, the picture is similar. Human capital is not rewarded equally: the highest-skilled workers pull ahead of everyone else.

There are some important takeaways here. One is that changes in the future of work will lead to increasing inequality. The question is: how will society function when people no longer need to work?

How to solve the income problem

Until now, most people have had to work for their share of the economic pie. But what happens when automation replaces jobs? How can societies support people who have lost their jobs?

If the labor market won’t, another institution with enough power must step up: the state.

Most developed countries are already welfare states. But the welfare state also needs to change, because it was originally designed to supplement the labor market. Its fundamental principle is that the people who work support those who don’t. Unemployment was the exception.

In an automated world, that will no longer be the case. The welfare state needs to be replaced by the Big State, an institution that understands there won’t be enough work for everybody.

The Big State can accomplish two primary goals: tax those who benefit from automation and redistribute income to those who are harmed by it.

It can tax high-earning workers such as software developers or tech company managers. It can also collect money from owners of traditional capital, including land, machines, or property rights. And it can tax businesses, especially the ones that earn extra profit from the automation they use.

Once the Big State has gathered all this money, there are two ideas on how to distribute the cash. You might have heard the term Universal Basic Income (UBI), which would provide a payment to everyone.

But another idea is Conditional Basic Income (CBI), which would support a specific community. CBI avoids a drawback of UBI: perceived unfairness. If everyone got money from the state regardless of circumstances, it would feel unfair to some people, which could split communities and even lead to conflict.

CBI, by contrast, would only go to people who meet certain criteria. It is a system that enables earners to share their wealth with the individuals they really want to help.
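The difference between the two schemes comes down to simple arithmetic, which the short Python sketch below illustrates. Everything in it is a made-up assumption for illustration: the names, the revenue figure, and especially the `volunteers` criterion, which stands in for whatever conditions a community might actually choose.

```python
def universal_basic_income(revenue, population):
    """UBI: split the collected revenue equally across everyone."""
    return revenue / population


def conditional_basic_income(revenue, people, is_eligible):
    """CBI: split the revenue equally among people who meet the criteria."""
    eligible = [person for person in people if is_eligible(person)]
    if not eligible:
        return {}
    share = revenue / len(eligible)
    return {person["name"]: share for person in eligible}


# Hypothetical community of four and a hypothetical tax revenue.
people = [
    {"name": "Ana", "volunteers": True},
    {"name": "Ben", "volunteers": False},
    {"name": "Cara", "volunteers": True},
    {"name": "Dev", "volunteers": False},
]
revenue = 1200.0

# Under UBI, everyone receives the same amount.
ubi_share = universal_basic_income(revenue, len(people))

# Under CBI, only people meeting the (hypothetical) criterion receive a share.
cbi_shares = conditional_basic_income(
    revenue, people, lambda person: person["volunteers"]
)

print(ubi_share)   # 300.0
print(cbi_shares)  # {'Ana': 600.0, 'Cara': 600.0}
```

With the same pot of money, a conditional scheme pays larger amounts to fewer people, so the choice of criteria is what makes it feel fair or unfair.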

This way, a better, more stable society could emerge, one where people have to work less but still feel supported by strong communities around them.

The future of AI

Recent advances in artificial intelligence have generated a lot of hype, anxiety, and controversy. Much of what we currently call AI is based on neural network models, which use chains of calculations to analyze massive amounts of data and identify patterns.

Automation will change the future of work more than people can imagine. When computers train themselves for jobs we thought were too hard for them, the labor market will transform immensely as human labor is mostly replaced. To support people who will face unemployment, the state should redistribute the income of high-income earners and capital owners throughout the wider population.

Many businesses already use AI software to boost their productivity, but you can also use such programs to organize your data or generate completely new pictures and content with the help of tools like Writesonic.

Private companies and governments use the technology to do everything from optimizing image searching and showing internet ads to diagnosing diseases and piloting autonomous aircraft.

Where AI research leads in the future is unclear, but many believe it will continue delivering revolutionary products.

One of the biggest concerns is AI ethics, a subfield of ethics that deals with the ethical implications of AI systems. Its aim is to ensure that AI is used for the greater good and does not harm society or individuals.

FAQ

What is artificial intelligence?

Artificial intelligence, or AI, is the simulation of human intelligence processes by computer systems. These processes include learning, reasoning, and self-correction.

How does AI work?

AI works by using algorithms and data to create patterns and models that can then be used to make decisions or predictions. The process of creating these models is known as machine learning, and it can be done through various techniques such as neural networks and deep learning.

What are the types of artificial intelligence?

There are two main types of AI: weak AI and strong AI. Weak AI, also known as narrow AI, is designed to perform a specific task or set of tasks, while strong AI, also known as artificial general intelligence (AGI), is designed to be as intelligent as a human being and can perform any intellectual task that a human can.

What is machine learning?

Machine learning is a type of AI that allows computer systems to learn and improve from experience without being explicitly programmed. This is done by using algorithms to analyze data and identify patterns, which can then be used to make predictions or decisions.
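To make "learning from experience without being explicitly programmed" concrete, here is a minimal Python sketch, assuming a toy dataset invented for this example. The program is never told the rule behind the data (here, y = 2x + 1); it starts with a guess and repeatedly nudges two numbers, `w` and `b`, to shrink its prediction error. The function name `fit_line` and the settings are illustrative choices, not a standard API.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=2000):
    """Learn w and b so that w * x + b approximates y, using gradient descent."""
    w, b = 0.0, 0.0  # start with no knowledge of the pattern
    n = len(xs)
    for _ in range(epochs):
        # Average gradient of the squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Move both parameters a small step in the direction that reduces error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy "experience": examples generated by a rule the program never sees directly.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

w, b = fit_line(xs, ys)
prediction = w * 10 + b  # predict for an input that was not in the data

print(round(w, 3), round(b, 3), round(prediction, 3))
```

The learned values end up very close to 2 and 1, so the prediction for an unseen input of 10 lands near 21. Real systems use far more data and parameters, but the loop of predicting, measuring error, and adjusting is the same idea.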

What is deep learning?

Deep learning is a subset of machine learning that uses neural networks with multiple layers to analyze and understand complex data. On tasks involving complex data such as images, audio, or text, this often lets it identify patterns and make more accurate predictions than traditional machine learning algorithms.

What is a neural network?

A neural network is a type of machine learning algorithm that is loosely inspired by the human brain. It consists of interconnected nodes, or neurons, that process and transmit information.
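A single artificial neuron can be sketched in a few lines of Python. The example below is a simplified perceptron in the spirit of the card task described earlier in this article, not a reconstruction of Rosenblatt's machine. The way each card is encoded (two numbers saying whether the left or right half carries the mark) and the names `predict` and `train` are assumptions made for this illustration.

```python
def predict(weights, bias, inputs):
    """One neuron: weighted sum of the inputs, then a yes/no threshold."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(samples, epochs=10, learning_rate=1.0):
    """Perceptron rule: adjust the weights only when a prediction is wrong."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in samples:
            error = label - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical encoding: [mark on left half, mark on right half].
# Label 0 means "mark is on the left", label 1 means "mark is on the right".
cards = [
    ([1, 0], 0),
    ([0, 1], 1),
]

weights, bias = train(cards)

left_guess = predict(weights, bias, [1, 0])
right_guess = predict(weights, bias, [0, 1])
print(left_guess, right_guess)  # 0 1
```

A full neural network connects many such neurons in layers, so that the outputs of one layer become the inputs of the next.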

What is the difference between weak AI and strong AI?

The main difference between weak AI and strong AI is that weak AI is designed to perform a specific task or set of tasks, while strong AI is designed to be as intelligent as a human being and can perform any intellectual task that a human can.

What are some applications of AI?

AI is used in a variety of applications, including computer vision, natural language processing, and learning algorithms. It is also being used in fields such as healthcare, finance, and transportation to improve efficiency and accuracy.

What is the role of AI in data science?

AI is an important aspect of data science, as it allows for the analysis of large amounts of data and the development of predictive models. It is used in areas such as machine learning and deep learning to identify patterns and make predictions.

How is AI being used today?

AI is being used in a wide range of fields and applications, including virtual assistants, fraud detection, and autonomous vehicles. It is also being used to improve healthcare outcomes by analyzing medical data and identifying potential treatments.

What is artificial intelligence (AI)?

Artificial intelligence, or AI, refers to the ability of computer systems to perform tasks that would normally require human intelligence, such as learning, reasoning, problem-solving, and pattern recognition.

How does AI work?

AI algorithms use computer science techniques to process large amounts of data and learn from it, allowing AI applications to analyze and identify patterns or make predictions. These algorithms can be trained using supervised learning, unsupervised learning, or reinforcement learning techniques.

What are the different types of AI?

AI can be classified into three types: narrow or weak AI, general or strong AI, and artificial superintelligence. Narrow AI is designed to perform specific, focused tasks, while general AI can perform any intellectual task that a human can. Artificial superintelligence, the most advanced form of AI, would have intellectual capabilities far beyond those of humans.

What are artificial neural networks and how are they used in AI?

Artificial neural networks (ANNs) are a subset of AI techniques designed to loosely mimic the structure and function of biological neural networks, the webs of neurons that make up the human brain. ANNs are used in AI models to recognize patterns and make decisions based on the input data.

Who coined the term “artificial intelligence”?

The term “artificial intelligence” was coined by John McCarthy in his 1955 proposal for the Dartmouth workshop, which took place in 1956. McCarthy is considered one of the founding fathers of the field of artificial intelligence.

What are some examples of AI applications?

AI applications include speech recognition, image and object recognition, autonomous vehicles, robotics, chatbots, expert systems, and many others. AI is also used in various industries, such as healthcare, finance, and manufacturing, to improve efficiency and accuracy.

Does AI require human intervention?

It depends on the type of AI and the task it is performing. Some AI models require human input or guidance during training, while others can learn and make decisions independently.

What are some of the limitations of AI?

AI is still limited in its capabilities and often requires human intelligence for certain tasks, such as common sense reasoning and creativity. Additionally, AI can be biased or make incorrect decisions if the input data is flawed or incomplete.

How has AI evolved over time?

The first AI systems were developed in the 1950s and 1960s, but modern AI has only become possible with the advent of powerful computers and the development of advanced AI algorithms. Today, AI is still evolving and improving, with new applications and techniques being developed all the time.

How can AI be used to help society and solve problems?

AI can be applied to a wide range of societal problems, such as medical diagnosis, disaster response, environmental monitoring, and social welfare. AI can also help businesses and organizations improve efficiency and productivity, reduce costs, and develop new solutions to complex problems.